Over the past few years, my team has moved from a waterfall process to agile, and now to DevOps.

For organizations deploying new software to production several times a day, one of the most challenging transformation issues is the need to revolutionize testing. In an era of continuous deployment and updates, there’s no time for QA teams to identify a problem and kick it back to the developers. Gone are the days when you tested your app after development and before going to production.

During this journey (read "Our long journey towards DevOps"), I’ve come up with a very simple framework that has changed my view of testing (my main role being product manager).

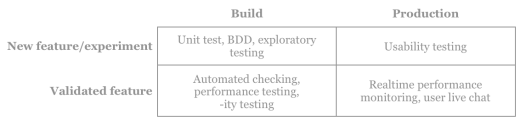

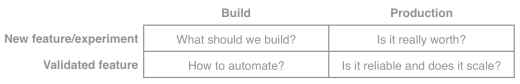

From the testing perspective, we try to answer four questions about a feature, related to its maturity and its stage in the product lifecycle. Typically, maturity is about whether the feature is an experiment or a validated functionality, whereas the stage in the product lifecycle is about whether the feature is being actively developed or is in the production and maintenance stage.

This is basically the testing matrix:

1) What should we build?

At this stage, we prepare for the coming sprint and analyse the new features to be developed through their user stories. Each story describes the intent of the feature. For example: “as a user, I want to be able to import existing test cases from an Excel sheet into Hiptest”.

OK, fine, but what exactly should we build?

This is where Behavior Driven Development (BDD) has proven a very powerful approach for me over the past few years. It aligns the team on the definition of what we should build. All the stakeholders (testers, developers, Product Owner, marketing) collaborate on:

- product acceptance criteria (the examples),

- business acceptance criteria (the assumptions we want to validate).

That’s the shift left. We define tests before building the features.
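To make the "examples" idea concrete, here is the import story from above written as one acceptance example in Given/When/Then form. In Hiptest this would be a natural-language scenario; it is sketched here as a plain Python test instead. The `import_test_cases` function, the CSV text standing in for the Excel sheet, and the `name`/`steps` columns are all hypothetical, not Hiptest’s actual import format.

```python
import csv
import io
from dataclasses import dataclass


@dataclass
class ImportedCase:
    """Hypothetical model of a test case once it is in the project."""
    name: str
    steps: list[str]


def import_test_cases(sheet: str) -> list[ImportedCase]:
    """Hypothetical importer: one row per test case, steps separated by ';'.

    A CSV string stands in for the Excel sheet so the sketch needs no
    third-party spreadsheet library.
    """
    reader = csv.DictReader(io.StringIO(sheet))
    return [
        ImportedCase(
            name=row["name"].strip(),
            steps=[s.strip() for s in row["steps"].split(";") if s.strip()],
        )
        for row in reader
    ]


def test_import_existing_test_cases_from_a_sheet() -> None:
    # Given a sheet that already contains two test cases
    sheet = (
        "name,steps\n"
        "Login,open login page; enter credentials; submit\n"
        "Logout,click avatar; click logout\n"
    )
    # When the user imports the sheet
    imported = import_test_cases(sheet)
    # Then both test cases are created, with their steps in order
    assert [case.name for case in imported] == ["Login", "Logout"]
    assert imported[1].steps == ["click avatar", "click logout"]


if __name__ == "__main__":
    test_import_existing_test_cases_from_a_sheet()
    print("acceptance example passed")
```

In practice the example is agreed on before the importer exists, so it fails until the feature is built; the importer is included here only so the sketch runs on its own.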

Guess what? We use Hiptest for BDD :). Eat your own dog food, as they say.

Then, our dev team develops the feature AND the unit tests. We perform some exploratory testing and deploy the feature to production.

2) Is it worth it?

Now we have the feature (or prototype) in the hands of real users. This phase is about measuring whether or not the business assumptions are met. When the answer is no, just throw away the code together WITH the tests and iterate again…

A good way to test the assumption is to measure the change in behavior. For example: “does the import feature increase the activation rate by 10%?” Measuring change in behavior is the most effective way I’ve found to evaluate the impact of a feature.
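The 10% question above can be turned into a concrete check. This minimal sketch assumes "increase by 10%" means a relative lift (for example, from a 20% to a 22% activation rate) rather than ten percentage points, and adds a pooled two-proportion z-test so that noise in a small cohort is not mistaken for a real change. The post does not say how the measurement is done: the cohort definitions, the significance level, and the figures in the usage lines are illustrative assumptions, not Hiptest data.

```python
import math
from dataclasses import dataclass


@dataclass
class Cohort:
    signups: int    # users who signed up during the measurement window
    activated: int  # of those, users who reached the (assumed) activation event

    @property
    def rate(self) -> float:
        return self.activated / self.signups


def relative_lift(before: Cohort, after: Cohort) -> float:
    """Relative change in activation rate: 0.10 means +10%."""
    return after.rate / before.rate - 1.0


def two_proportion_p_value(before: Cohort, after: Cohort) -> float:
    """Two-sided p-value of a pooled two-proportion z-test."""
    pooled = (before.activated + after.activated) / (before.signups + after.signups)
    se = math.sqrt(pooled * (1 - pooled) * (1 / before.signups + 1 / after.signups))
    z = (after.rate - before.rate) / se
    # Standard normal CDF via math.erf, doubled for a two-sided test.
    return 2 * (1 - 0.5 * (1 + math.erf(abs(z) / math.sqrt(2))))


def assumption_met(before: Cohort, after: Cohort,
                   target_lift: float = 0.10, alpha: float = 0.05) -> bool:
    """Lift reaches the target AND the change is unlikely to be noise."""
    return (relative_lift(before, after) >= target_lift
            and two_proportion_p_value(before, after) < alpha)


if __name__ == "__main__":
    # Illustrative numbers only: cohorts before vs. after shipping the import feature.
    before = Cohort(signups=2000, activated=400)
    after = Cohort(signups=2000, activated=460)
    print(f"lift={relative_lift(before, after):.1%}, "
          f"p={two_proportion_p_value(before, after):.3f}, "
          f"met={assumption_met(before, after)}")
```

With these illustrative cohorts the lift is 15% and p is about 0.02, so the assumption holds; the same 15% lift measured on cohorts of 100 users would not be significant, which is why the sketch checks both conditions.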

Monitoring live customer behavior can also give us a lot of insight into the usability of our app. A button that is almost never clicked may be in the wrong place; a pop-up window closed after only 5 seconds may need more entertaining content, or shorter sentences.
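The two signals mentioned above can be computed from raw interaction events. This sketch assumes the events are already collected as simple lists (one button id per click, and the number of seconds each pop-up stayed open); the 1% click-rate floor and the 5-second cut-off are illustrative thresholds, and the function names are hypothetical.

```python
from collections import Counter


def rarely_clicked(page_views: int, clicks: list[str], buttons: list[str],
                   min_click_rate: float = 0.01) -> list[str]:
    """Buttons clicked on fewer than `min_click_rate` of page views.

    `clicks` holds one button id per click event; buttons that were never
    clicked get a count of 0 from the Counter.
    """
    counts = Counter(clicks)
    return [b for b in buttons if counts[b] / page_views < min_click_rate]


def quick_close_share(open_durations: list[float], cutoff_s: float = 5.0) -> float:
    """Share of pop-up displays closed within `cutoff_s` seconds."""
    closed_fast = sum(1 for d in open_durations if d <= cutoff_s)
    return closed_fast / len(open_durations)


if __name__ == "__main__":
    # Illustrative events only.
    clicks = ["import"] * 120 + ["export"] * 4
    print(rarely_clicked(1000, clicks, ["import", "export", "share"]))
    print(quick_close_share([2.0, 4.5, 30.0, 12.0]))
```

A flagged button or a high quick-close share is a prompt to look closer at placement or wording, not proof of a usability problem on its own.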

So most of the work in these first two steps is about business discovery (with BDD) and business validation (observe & measure).

Testing is not just about the product, but about the business AND the product that supports it.

3) How to automate?

The experiment was successful, so let’s make this feature a first-class citizen of our product. Now it is time to invest more in testing to ensure the service is delivered at scale: automate the BDD tests, and do some performance testing and other "-ity" testing (security, for instance).

More importantly, tests should be integrated into the continuous integration process, where they give rapid feedback on unanticipated side effects of changes. Speed is one of the most important attributes to consider when evaluating a test’s value.

4) Is it reliable and does it scale?

The critical point is that while a server may go down, the application must not. Scale and reliability are what matter most, and testing has to happen in real time.

That’s the shift right.

We typically monitor two characteristics: performance and usability.

We use tools like Appsignal to monitor our app and gain continuous insight into its performance. It helps us see how a feature behaves right after its deployment to production.
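Appsignal handles this for us, and its API is not reproduced here. The check below is a tool-agnostic sketch of the kind of comparison such monitoring enables: given response-time samples collected before and after a deployment (how they are collected is assumed), flag the feature if its 95th-percentile latency grew by more than 20%. The percentile method, the thresholds, and the function names are hypothetical choices.

```python
import math


def percentile(samples: list[float], p: int) -> float:
    """Nearest-rank percentile, with p an integer in 1..100."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


def latency_regressed(before_ms: list[float], after_ms: list[float],
                      p: int = 95, tolerance: float = 0.20) -> bool:
    """True if the post-deploy p-th percentile exceeds the pre-deploy one
    by more than `tolerance` (0.20 means 20%)."""
    return percentile(after_ms, p) > percentile(before_ms, p) * (1 + tolerance)


if __name__ == "__main__":
    # Response times in milliseconds; illustrative values only.
    before = [float(ms) for ms in range(100, 120)]
    after = [ms * 1.5 for ms in before]
    print(latency_regressed(before, before), latency_regressed(before, after))
```

Comparing a high percentile rather than the mean keeps a slow tail visible even when most requests are unaffected.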

Tuning a feature’s performance for days before deployment may be a total waste if the feature does not meet customer expectations in the first place. It is better to launch a feature fast, test its behavior in production, and then adjust it once all the assumptions made during development are validated. It’s the "Experiment, test and learn" motto.

Just as importantly, we also collect real-time user feedback (through live chat) to raise issues that the previous tools might not detect. Sometimes, wrong behaviors are not due to errors in the code, but simply to a bad UX, or they only appear once a certain volume of data is reached. For example, in the early days of Hiptest, we did not specify any sort order for displaying the folders, and we did not yet have enough scenarios to notice the issue, but users saw the problem when they started having hundreds of scenarios. No errors were raised by the application, just annoyed users, until we forced an alphabetical sort of scenarios.
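The post doesn’t say how the sort was implemented. As a minimal Python sketch, assuming folder and scenario names are plain strings sorted in the application layer, forcing an alphabetical order can be a single `sorted` call; the detail that matters is case-insensitivity, since a plain `sorted` compares code points and puts every capitalized name before every lowercase one. The function name and sample names are hypothetical.

```python
def sort_alphabetically(names: list[str]) -> list[str]:
    """Case-insensitive alphabetical order.

    The raw string is the tie-breaker, so "Login" and "login" always come
    out in the same order and the display is deterministic.
    """
    return sorted(names, key=lambda name: (name.casefold(), name))


if __name__ == "__main__":
    # Hypothetical names, in whatever order storage returns them when no sort is specified.
    names = ["checkout", "Login", "admin settings", "Billing", "login"]
    print(sorted(names))               # plain code-point order: capitals first
    print(sort_alphabetically(names))
```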

Testing Swing

Most testing used to be performed after development and before going to production. No matter how hard you resist, that time is over.

Nowadays, with the rise of DevOps and the acceleration of deployment cycles, testing has moved both upstream and downstream. This is the testing swing!